The analysis of HEAT Track data that underpins this case study has also been written up as a peer-reviewed article published in the Widening Participation and Lifelong Learning Journal.

Canterbury Christ Church University (CCCU) have used data provided by HEAT to examine the impact of their intensive Inspiring Minds programme on Key Stage 4 (GCSE) exam attainment. Inspiring Minds is a Science, Technology, Engineering & Maths (STEM) activity delivered to Year 10 pupils, who attend six Saturday sessions of between four and six hours each.

All HEAT member organisations can apply the approach taken in this evaluation to their own activities; the data and tools used are available to all HEAT members. The evaluation design followed here may provide a useful model for similar outreach activities, i.e. heavily targeted activities that are not over-subscribed, for which a comparison group is not readily available. The full evaluation report is available to HEAT members on File Store.

Inspiring Minds

Inspiring Minds has been designed by leading academics from CCCU’s School of Teacher Education. It is based on ground-breaking pedagogy and aims to enable students not only to understand their school curriculum but also to develop a rich and deep understanding of the nature of, and interactions between, science and their other subjects. This is achieved through Informal Science Learning (ISL), with more information on this approach available on the Inspiring Minds website.

Inspiring Minds is carefully targeted, offered to schools with high proportions of Uni Connect target students and low levels of science capital. 90% of participants are from target wards. Evaluation based on surveys and semi-structured interviews has already shown increases in intentions to study STEM post-16 and intentions to attend university. The full report can be found here.

Although these findings are positive, in many cases these intentions cannot be realised unless the required Key Stage 4 exam grades have been achieved. Owing to the pedagogical research underpinning the design of the activity, the causal mechanism is in place for it to raise attainment, particularly in Science and Maths given its STEM focus. A Theory of Change detailing this mechanism was created in HEAT’s Evaluation Plans Tool (members only) and recorded on the database alongside the activity record. However, as with all impact evaluations, the challenge is to provide evidence of the causal relationship between participating in the activity and the outcome in question: improved Key Stage 4 exam attainment.

Evaluation Design

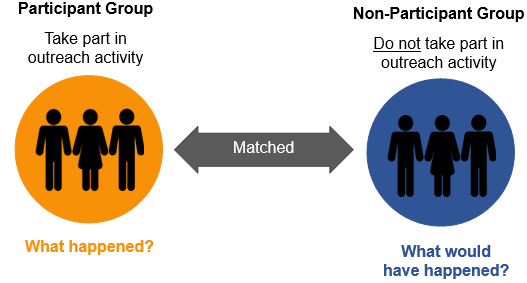

The evaluation drew on a quasi-experimental design, whereby participants of Inspiring Minds were matched to non-participants based on confounding variables known to influence Key Stage 4 attainment. Evaluation designs incorporating matching techniques such as this can reach Type 3 standards of evidence according to the Office for Students’ report (OfS, 2019), with this type considered capable of establishing a causal impact. However, we are critical of our work here at HEAT, and reflection on the limitations associated with this evaluation led us to deem it a strong Type 2 evaluation. We have plans in place to raise the standard in the next evaluation cycle (see Next Steps).

As is the case with most educational interventions, the Inspiring Minds participant group has already been established. The challenge, therefore, is to find or create a comparison group that is as similar as possible in terms of characteristics.

Students from the participant group were matched with students from a non-participant group. Data for a large population of non-participants was available thanks to the Kent and Medway Progression Federation’s extensive baselining of schools.

Participants were matched to non-participants based on their gender, ethnicity, whether they were first in their family to attend university, their IMD and IDACI deciles, and the average attainment of the schools they attended. Measures were also taken to ensure that the prior attainment at Key Stage 2 and the motivation levels of participants were as similar as possible to those of the non-participants with whom their outcomes were being compared.
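As an illustration only, the matching idea described above can be sketched in a few lines of Python. This is not the procedure used in the evaluation, whose details are in the full report. The sketch assumes exact matching on the categorical variables followed by nearest-neighbour matching on school attainment within a tolerance; the `Student` fields, the `caliper` parameter and all data values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Student:
    # Hypothetical record layout; field names are assumptions for illustration.
    student_id: str
    gender: str
    ethnicity: str
    first_in_family: bool
    imd_decile: int
    idaci_decile: int
    school_attainment: float  # average attainment of the school attended


def exact_key(s: Student) -> tuple:
    """Variables on which a participant and a comparator must agree exactly."""
    return (s.gender, s.ethnicity, s.first_in_family, s.imd_decile, s.idaci_decile)


def match(participants, pool, caliper=5.0):
    """Pair each participant with the closest unused non-participant.

    A candidate must share the exact-match key and have a school attainment
    score within `caliper` points. Matching is without replacement, so each
    non-participant is used at most once.
    """
    available = list(pool)
    pairs = {}
    for p in participants:
        candidates = [
            c for c in available
            if exact_key(c) == exact_key(p)
            and abs(c.school_attainment - p.school_attainment) <= caliper
        ]
        if not candidates:
            continue  # participant stays unmatched and drops out of the comparison
        best = min(candidates, key=lambda c: abs(c.school_attainment - p.school_attainment))
        pairs[p.student_id] = best.student_id
        available.remove(best)
    return pairs


# Made-up example data.
participants = [
    Student("P1", "F", "White", True, 2, 3, 41.0),
    Student("P2", "M", "Asian", False, 4, 4, 45.5),
]
pool = [
    Student("N1", "F", "White", True, 2, 3, 39.5),
    Student("N2", "F", "White", True, 2, 3, 44.0),
    Student("N3", "M", "Asian", False, 4, 4, 60.0),  # same key, school too different
    Student("N4", "M", "White", False, 4, 4, 45.0),  # close school, different key
]

pairs = match(participants, pool)
print(pairs)
```

In this toy data P1 is paired with N1, the closer of its two eligible comparators, while P2 has no eligible comparator and is left unmatched. Unmatched participants are one reason matched samples can end up smaller than the original participant group.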

HEAT Database Coding

The Inspiring Minds programme already existed as a Programme Title on HEAT to which participants were registered. Matched non-participants were then registered to the same activity record, alongside participants. Unlike participants, however, the Evaluation Group for these non-participating students was set to ‘Comparison’. Coding the data in this way ensured that the Key Stage 4 attainment for these ‘Comparison’ students would be reported separately from ‘Participants’ in HEAT’s Key Stage 4 dashboard.

Results

The analysis found that participants of Inspiring Minds achieved higher Attainment 8 scores than the matched group of non-participants. On average, participants achieved 6 grades higher across eight core subjects. This result was significant at p<.10.

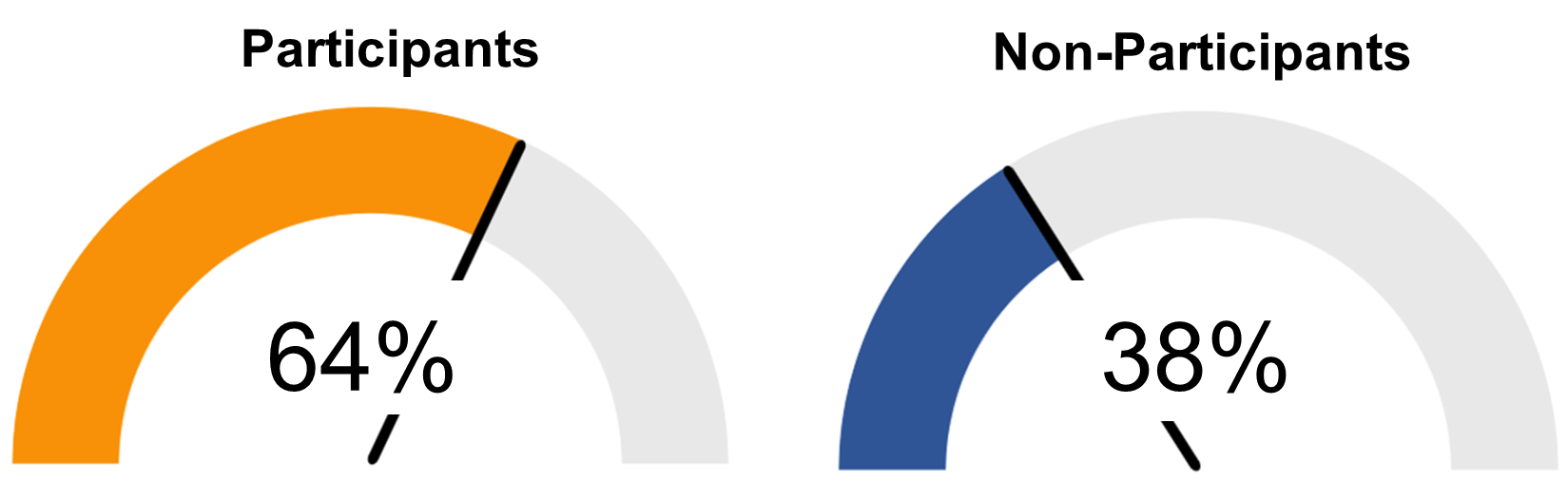

The analysis found that participants of Inspiring Minds were 26 percentage points more likely to achieve a 9 to 4 pass in Maths (64%) than the non-participant group (38%). This result was significant at p<.05.

Participants of Inspiring Minds were 7 percentage points more likely to achieve a 9 to 4 pass in Science (32%) than the non-participant group (24%), although this result was not statistically significant. However, the relatively low participant counts in this evaluation made achieving statistical significance difficult. We would therefore caution against assuming that the lack of statistical significance means there was no impact on Science grades.
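To show why small groups make significance hard to reach, the sketch below applies a two-sided two-proportion z-test to the Science pass rates quoted above (32% and 24%) under two hypothetical group sizes. The group sizes are assumptions chosen purely for illustration; the actual counts, and the test used in the evaluation, are given in the full report and may differ.

```python
import math


def two_proportion_p_value(p1: float, n1: int, p2: float, n2: int) -> float:
    """Two-sided p-value for a difference in proportions (pooled z-test)."""
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # For a standard normal Z, P(|Z| > |z|) equals erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


# Pass rates from the text; group sizes below are hypothetical.
participant_rate, comparison_rate = 0.32, 0.24

p_small = two_proportion_p_value(participant_rate, 50, comparison_rate, 50)
p_large = two_proportion_p_value(participant_rate, 500, comparison_rate, 500)

print(f"n = 50 per group:  p = {p_small:.3f}")
print(f"n = 500 per group: p = {p_large:.3f}")
```

The same observed difference fails to reach significance at the smaller group size but clears the conventional thresholds at the larger one, which is why a non-significant result from a small sample should not be read as evidence of no effect.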

Next Steps

The evaluation followed here is retrospective; a comparison group of non-participants was constructed after Inspiring Minds had been delivered. This was possible thanks to the extensive data collection carried out under KMPF’s school baselining. Although this provided rich data for matching based on the variables listed above, it was not possible to guarantee that participants and non-participants were equally likely to participate in Inspiring Minds. This ‘selection bias’ can mean that the groups differ in their underlying motivation towards their education.

In light of this, the next step for this evaluation is to prospectively select, and collect data for, a carefully chosen group of non-participants with whom to compare the outcomes of the participant group. Prospective evaluations are designed before the activity is implemented and are generally thought to provide stronger and more credible results. When identifying both groups, selection approaches will be consistent, and baseline attitudinal data will be collected to ensure the groups are similar in terms of motivation towards their education.